James Perkins

10 Essential Cloud Questions

Cloud migration is a key tenet of enhancing operational efficiency, scalability, and resiliency. Shifting to the cloud not only helps lower total cost of ownership (TCO) but can also increase agility to meet market demand. Even so, cloud journeys require thoughtful consideration.

When it comes to delivering real-time data in and around the cloud, there are complex dependencies to think through. The following presents ten essential questions to consider before migrating any real-time estate to the cloud. Addressing these will support a smoother transition and post-migration experience.

1. What are your latency requirements?

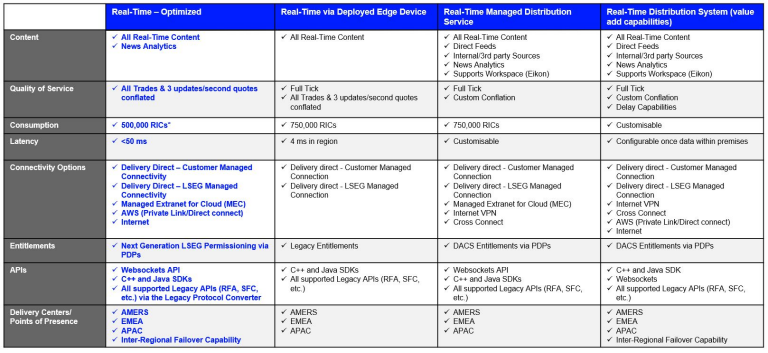

Top of mind is latency! The time it takes for data to travel from its point of origin to target applications and systems can affect decision-making and operational efficiency. Having the right tech stack and cost structure in place to support the required latency is a good starting place. From 4 milliseconds to 1 second or more, LSEG provides a range of cloud-ready real-time delivery options across the latency spectrum, from trade-safe conflated Real-Time Optimized (RTO) to Real-Time - Full Tick (RT - FT). For latency requirements below 4 ms, please review LSEG’s Low Latency Services.
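
Checking whether a feed actually meets a latency requirement means comparing origin timestamps with receive timestamps and looking at the tail, not just the average. The sketch below is illustrative only: it assumes you already have paired timestamps in milliseconds from synchronized clocks, and the sample values and the 4 ms budget are made up for the example.

```python
import math

def percentile(values, pct):
    """Nearest-rank percentile (pct in 0-100) of a non-empty list."""
    ordered = sorted(values)
    # Smallest value with at least pct% of observations at or below it
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def latency_report(origin_ms, received_ms, budget_ms):
    """Compute one-way latencies and compare p50/p99 against a latency budget."""
    latencies = [r - o for o, r in zip(origin_ms, received_ms)]
    p50 = percentile(latencies, 50)
    p99 = percentile(latencies, 99)
    return {"p50": p50, "p99": p99, "within_budget": p99 <= budget_ms}

# Illustrative timestamps in milliseconds; not real measurements
origin = [0.0, 10.0, 20.0, 30.0, 40.0]
received = [2.5, 13.0, 22.0, 36.0, 43.5]
print(latency_report(origin, received, budget_ms=4.0))
# {'p50': 3.0, 'p99': 6.0, 'within_budget': False}
```

Here the median latency (3 ms) is comfortably inside the budget, but a single slow update (6 ms) pushes the tail over it, which is why tail percentiles are the more useful test for time-sensitive workflows.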

2. What is your volume of data consumption?

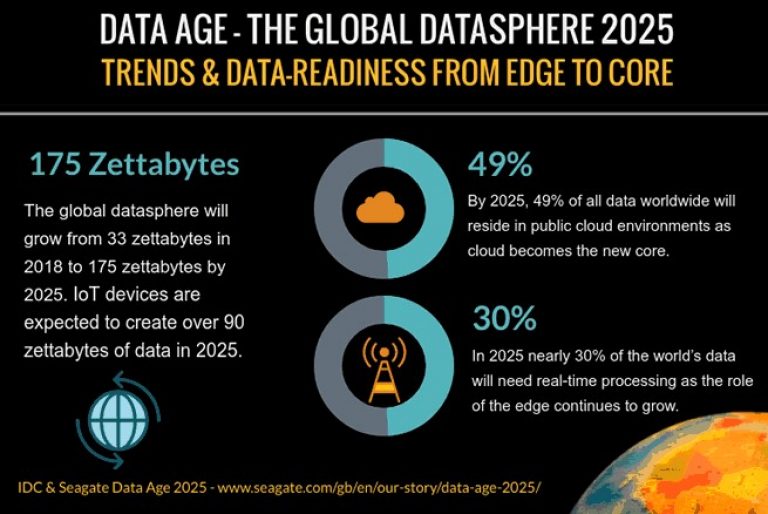

The volume of real-time data consumed is a key factor in sizing any cloud journey. In practical terms, this translates into the universe of securities you need priced throughout the day, also known as the “watch list” size and/or number of unique instruments. As an initial basis for volume sizing, take the watch list size and multiply it by the refresh rate (the number of refreshes per second each instrument needs, i.e., one divided by the refresh interval). For example, if a list of one million futures and options contracts needs to be refreshed every four milliseconds (250 times per second per contract) throughout a market session, this requires 250 million refreshes per second, which runs into the trillions over a full session. On the other hand, a watch list of 100 FX rates updating every second requires only 100 refreshes per second, or about 8.6 million over a 24-hour day. Across this wide spectrum, bandwidth, compute, and storage (if needed) must be in place to handle the demand. As a general rule of thumb, data consumption rates continue to rise year over year across the board, so budget for growth.
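
The sizing arithmetic above can be reproduced in a few lines. This is a sketch under stated assumptions: every instrument is refreshed at a fixed interval (real instruments tick irregularly, so treat this as a ceiling), and the session lengths of 6.5 hours for the options example and 24 hours for FX are assumptions to replace with your own market hours.

```python
from fractions import Fraction

def refresh_volume(watch_list_size, interval_ms, session_seconds):
    """Estimate refreshes for a watch list refreshed at a fixed interval.

    Returns (refreshes per second, refreshes per session) as exact Fractions,
    so the results are not affected by floating-point rounding.
    """
    per_instrument_per_second = Fraction(1000, interval_ms)  # e.g. 4 ms -> 250 per second
    per_second = watch_list_size * per_instrument_per_second
    return per_second, per_second * session_seconds

# Assumed session lengths; replace with your own market hours
OPTIONS_SESSION_S = 23_400   # 6.5 hours
FX_DAY_S = 86_400            # 24 hours

for label, size, interval_ms, session_s in [
    ("1M futures & options every 4 ms", 1_000_000, 4, OPTIONS_SESSION_S),
    ("100 FX rates every 1 s", 100, 1000, FX_DAY_S),
]:
    per_s, per_session = refresh_volume(size, interval_ms, session_s)
    print(f"{label}: {int(per_s):,}/s, {int(per_session):,} per session")
```

Under these assumptions the options watch list needs 250,000,000 refreshes per second (5,850,000,000,000 per session), while the FX list needs 100 per second (8,640,000 per day), a gap of several orders of magnitude that drives very different bandwidth and compute choices.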

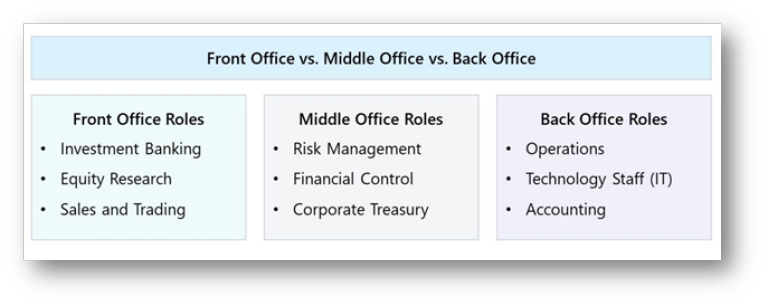

Business functions requiring real-time market data are diverse. However, most finance-related real-time data activities fall within what is designated as Front Office, Middle Office, or Back Office. These labels reflect the trade life cycle, which includes pre-trade, trade execution, and post-trade activities. While these segments don’t necessarily represent different core data needs, they do affect latency, volume, and even data licensing. For example, while Sales & Trading requires sub-second latency for trade execution, it’s unusual for risk management or accounting functions to need data refreshes with the same urgency. Many licensing terms define specific use cases and/or applications, which provides clarity on where the data is flowing and for what purposes it’s being used. Under such terms, real-time data licensed for a Front Office business activity would not be feeding an accounting system.

4. What Qualities of Service (QoS) are needed?

Quality of Service (QoS) is similar to latency in that it governs data flows per unit of time. However, it differs in that it is applied after latency and adds custom rules to inbound data. Latency is a function of technology, solution build, and connectivity; QoS, on the other hand, determines when and how applications and end users receive the real-time data. QoS can be used to protect infrastructure, control costs, or manage regulatory and compliance mandates.

An example of QoS in action is LSEG’s Real-Time Optimized (RTO) solution. RTO provides trade-safe conflated data: all TRADE updates are passed straight through without conflation, while only QUOTE updates are conflated. In other words, all trade data is received in full, while quotes, which account for most of the daily message volume, are limited. Behind the scenes, a traffic management system (TMS) throttles the data to ensure proper routing and compliance with defined rules.
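
To make trade-safe conflation concrete, here is a minimal sketch of the general idea. It is not LSEG’s RTO or TMS implementation: trades are forwarded immediately, while for each instrument only the newest quote seen in a conflation window is released when the window closes. The `Update` type, its field names, and the timestamp-driven windowing are hypothetical choices made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Update:
    ric: str        # instrument identifier (hypothetical field name)
    kind: str       # "TRADE" or "QUOTE"
    ts_ms: int      # event timestamp in milliseconds
    payload: dict

class TradeSafeConflater:
    """Pass trades straight through; conflate quotes to the latest per instrument per window."""

    def __init__(self, interval_ms):
        self.interval_ms = interval_ms
        self.pending_quotes = {}   # ric -> newest QUOTE seen in the current window
        self.window_start = None

    def on_update(self, update):
        """Return the list of updates to publish downstream for this input."""
        out = []
        if self.window_start is None:
            self.window_start = update.ts_ms
        # Close the current window if this update falls past its end
        if update.ts_ms - self.window_start >= self.interval_ms:
            out.extend(self.flush())
            self.window_start = update.ts_ms
        if update.kind == "TRADE":
            out.append(update)                        # trades are never conflated
        else:
            self.pending_quotes[update.ric] = update  # keep only the newest quote
        return out

    def flush(self):
        """Release the newest quote for each instrument and clear the buffer."""
        released = list(self.pending_quotes.values())
        self.pending_quotes.clear()
        return released

conflater = TradeSafeConflater(interval_ms=1000)
stream = [
    Update("ABC", "QUOTE", 0, {"BID": 10.0}),
    Update("ABC", "QUOTE", 200, {"BID": 10.1}),
    Update("ABC", "TRADE", 300, {"PRICE": 10.1}),
    Update("ABC", "QUOTE", 900, {"BID": 10.2}),
    Update("ABC", "QUOTE", 1100, {"BID": 10.3}),
]
published = [u for msg in stream for u in conflater.on_update(msg)] + conflater.flush()
print([(u.kind, u.ts_ms) for u in published])
# [('TRADE', 300), ('QUOTE', 900), ('QUOTE', 1100)]
```

Five inbound updates become three outbound ones: the trade survives untouched, while the four quotes collapse to the latest one per window.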

When considering a cloud deployment, choosing and understanding the right QoS will lead to a better experience. QoS options range from custom full tick to conflated and delayed data.

5. Which connectivity options suit your business needs?

Connectivity is the foundation of any real-time data deployment. As with pipes carrying water, you need to consider how much data must travel, and how fast, to determine the right pipe size. Equally important is understanding where the pipes can connect. This is where it helps to review cloud egress (outbound) and ingress (inbound) bandwidth limits (for example, 5 Gbps, 10 Gbps, 15 Gbps, 20 Gbps or more) as well as data transfer costs.
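
A back-of-the-envelope way to pick a pipe size is to multiply message rate by average message size, add headroom for bursts and growth, and compare the result with the available link sizes. In the sketch below, the 200-byte average message size and the 1.5x headroom factor are assumptions for illustration only; the link sizes are the example limits listed above.

```python
def required_gbps(messages_per_second, avg_message_bytes, headroom=1.5):
    """Estimate bandwidth in Gbps, scaled by a headroom factor for bursts and growth."""
    bits_per_second = messages_per_second * avg_message_bytes * 8
    return bits_per_second * headroom / 1e9

def smallest_fitting_link(gbps_needed, link_options_gbps=(5, 10, 15, 20)):
    """Return the smallest link size that covers the estimate, or None if none does."""
    for size in sorted(link_options_gbps):
        if size >= gbps_needed:
            return size
    return None

# Assumed workload: 1 million messages per second at ~200 bytes each
need = required_gbps(1_000_000, 200)
print(f"{need:.1f} Gbps needed -> {smallest_fitting_link(need)} Gbps link")
# 2.4 Gbps needed -> 5 Gbps link
```

A `None` result signals that the estimate exceeds every listed option, which is the point to revisit QoS (for example, conflation) or split traffic across multiple connections.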

For a long time, cloud connectivity was seen as a siloed endeavor. However, with the advent of multi-cloud platforms there is now more flexibility. To provide added options, LSEG offers a range of connectivity, including PrivateLink and Managed Extranet Connectivity (MEC), which supports customers hosted with different public cloud providers.

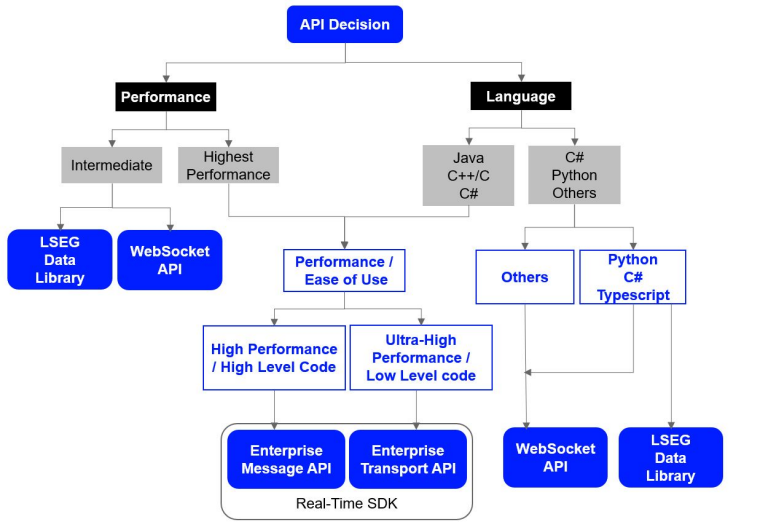

6. Which APIs best support your workflow?

Choosing the right API and integration capabilities ensures that cloud services support specific use cases. These decisions are driven either by a preference for a specific programming language, based on skill sets and/or accessibility, or by specific performance requirements. To support these decisions, LSEG offers various APIs, including WebSocket, Strategic APIs, and SDKs for C++ and Java, facilitating broad integration with systems. Review Real-Time Market Data APIs & Distribution. You may also find our session on Demystifying Real-Time APIs useful.
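
As a rough illustration of why the WebSocket API is language-neutral, its requests are plain JSON messages. The sketch below assumes the publicly documented message layout for LSEG’s Real-Time WebSocket API (a Login-domain request followed by item requests keyed by RIC, with an optional `View` to limit fields); verify field names against the official documentation before relying on them. The user name, application ID, position string, and RIC are placeholders, and cloud-hosted RTO connections authenticate with an access token, so their login message differs. Sending the messages over a live WebSocket connection (commonly with the `tr_json2` sub-protocol) is left out so the sketch runs on its own.

```python
import json

def login_request(user, app_id="256", position="127.0.0.1/net"):
    """Build a Login-domain request (placeholder credentials)."""
    return {
        "ID": 1,
        "Domain": "Login",
        "Key": {
            "Name": user,
            "Elements": {"ApplicationId": app_id, "Position": position},
        },
    }

def item_request(stream_id, ric, fields=None, streaming=True):
    """Build a market price request; 'View' limits the fields returned."""
    msg = {"ID": stream_id, "Key": {"Name": ric}}
    if fields:
        msg["View"] = list(fields)
    if not streaming:
        msg["Streaming"] = False  # one-off snapshot instead of a streaming subscription
    return msg

print(json.dumps(login_request("hypothetical_user")))
print(json.dumps(item_request(2, "TRI.N", fields=["BID", "ASK"])))
```

Because the payloads are just JSON, any language with a WebSocket client can consume the same stream, whereas the C++ and Java SDKs trade that simplicity for tighter performance control.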

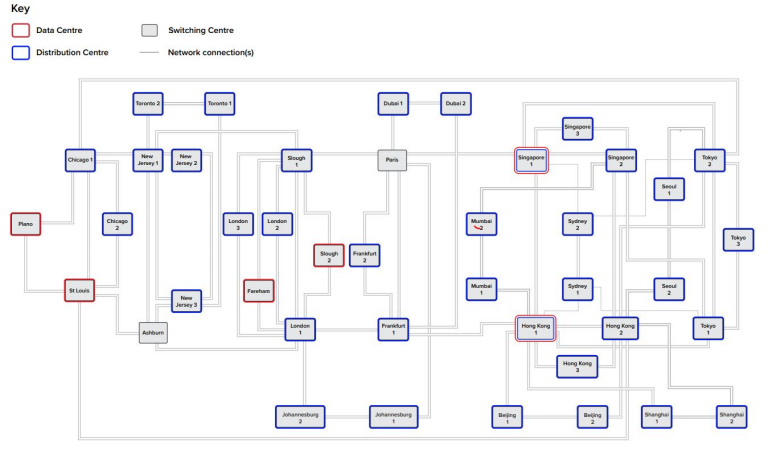

7. How do delivery centers affect your data latency and accessibility?

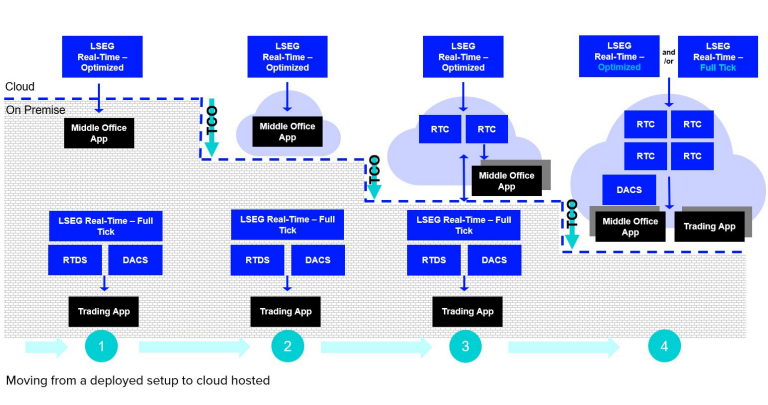

Managing global data flows adds regional and connectivity considerations. Most real-time data use cases can be supported fully in the cloud; however, in many cases there remain drivers to maintain a legacy on-premises presence. Hybrid deployments straddling cloud and private data centers can be even more involved.

For example, data latency can be significantly influenced by the geographical distance between data centers and end-users. Closer proximity results in lower latency, enhancing the performance of time-sensitive applications.
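
Physics sets a floor on how much distance can cost. Assuming light in optical fiber covers roughly 200 km per millisecond (about two-thirds of its speed in a vacuum), the sketch below computes the minimum one-way propagation delay for a route and, conversely, the longest route a latency budget allows. Real paths add switching, queuing, and processing time and are longer than straight lines, so actual latency will be higher; the 1,000 km route is hypothetical.

```python
# Assumption: light in optical fiber travels roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0

def min_one_way_ms(route_km):
    """Lower bound on one-way propagation delay over a fiber route of route_km."""
    return route_km / FIBER_KM_PER_MS

def max_route_km(latency_budget_ms):
    """Longest fiber route that propagation alone allows within a one-way budget."""
    return latency_budget_ms * FIBER_KM_PER_MS

print(min_one_way_ms(1_000))  # 5.0  (hypothetical 1,000 km route)
print(max_route_km(4))        # 800.0  (the 4 ms figure from question 1)
```

In other words, a 4 ms requirement cannot be met across more than about 800 km of fiber even before any processing, which is why the choice of delivery center matters.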

LSEG's network of delivery centers, including Points of Presence (PoPs) and Availability Zones (AZs), is designed to provide highly available services from multiple locations globally, natively in the cloud or on premises. Customers can choose a service from a center that meets their availability and latency requirements.

8. Do you have the right expertise and support?

Migrating to the cloud is both a strategic and a tactical undertaking: strategic because it outlines a vision of the future, tactical because it means creating project plans and migrating individual applications and/or processes. Each application or process has a unique set of requirements and calls for its own expertise to manage. Even when all the technical resources are in place, there are still nuances around connectivity, latency, and data. For example, real-time equity options data is structured differently from US Treasuries or FX rates. Having the right data and technology knowledge is key to success.

To supplement or partner with in-house expertise, LSEG provides a full range of consulting services, with SMEs spanning solutions architects, data and technical consultants, project managers, and business consultants. This includes cloud migration services such as Data Replication, as well as post-migration maintenance and operations. Find out more here.

9. How does AI support your cloud use case?

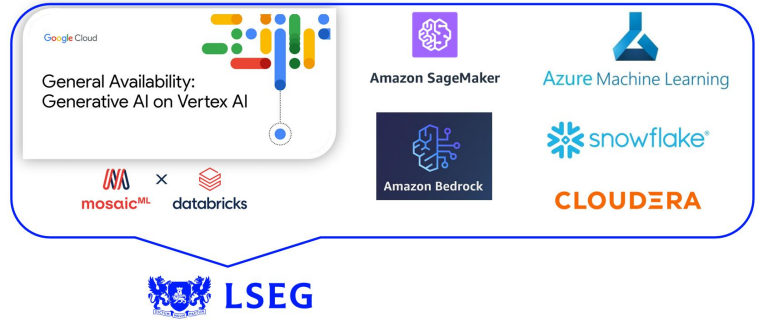

The cloud landscape and ecosystem are rapidly evolving, and AI is at the center. All public cloud providers offer a growing menu of AI services. From Google Vertex AI to AWS SageMaker and Bedrock to Azure ML, each touts unique strengths and capabilities. Beyond training and deploying LLMs, these AI services play an increasing role in ongoing data management, monitoring, reporting, and performance optimization across data, compute, and storage. Cross-cloud data warehouse and integration providers such as Snowflake, Databricks, and Cloudera also now highlight their AI services, including Cortex AI, Mosaic AI, and Cloudera AI respectively.

It is important to highlight that LSEG is cloud collaborative and partners with all cloud providers, maintaining a presence across the full cloud ecosystem. To see some of the ways we’re incorporating AI, check out Empowering financial research with AI.

10. What will my total cost of ownership (TCO) be?

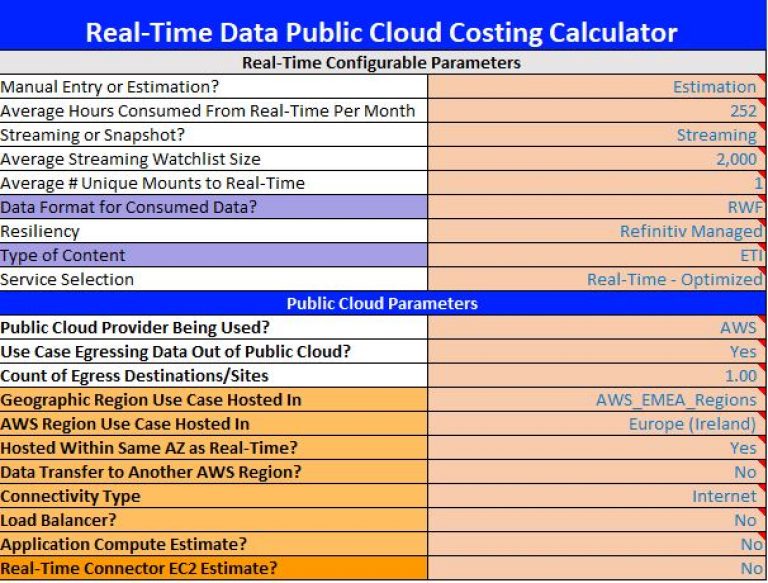

A final consideration in any cloud journey is how it affects the bottom line. With a variety of public cloud services and pay-as-you-go pricing models, it can be challenging to understand out-of-pocket costs. To aid in discovery, LSEG has created a Real-Time Public Cloud Cost Calculator, which produces TCO estimates for real-time data consumption in Azure, AWS, and GCP.
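
To show how the main pay-as-you-go cost lines combine into a TCO figure, here is a simplified model. It is not the logic behind LSEG’s calculator: every rate and quantity below is a placeholder, not any provider’s actual pricing, and growth is applied uniformly to all cost lines as a simplification (in line with the earlier advice to budget for growth).

```python
from dataclasses import dataclass

@dataclass
class MonthlyInputs:
    compute_hours: float        # instance-hours per month
    compute_rate: float         # price per instance-hour (placeholder)
    egress_gb: float            # GB transferred out of the cloud per month
    egress_rate_per_gb: float   # price per GB transferred out (placeholder)
    storage_gb: float           # GB stored, e.g. tick history, if any
    storage_rate_per_gb: float  # price per GB-month (placeholder)
    data_fees: float            # market data and licensing fees per month (placeholder)

def monthly_cost(m):
    """Sum the main cost lines into a single monthly figure."""
    return (m.compute_hours * m.compute_rate
            + m.egress_gb * m.egress_rate_per_gb
            + m.storage_gb * m.storage_rate_per_gb
            + m.data_fees)

def tco(m, months, monthly_growth=0.0):
    """Total cost over a horizon, compounding usage growth month on month."""
    total, factor = 0.0, 1.0
    for _ in range(months):
        total += monthly_cost(m) * factor
        factor *= 1 + monthly_growth
    return total

# Placeholder inputs for illustration only
inputs = MonthlyInputs(720, 2.0, 1000, 0.05, 500, 0.02, 1000)
print(round(monthly_cost(inputs), 2))            # 2500.0
print(round(tco(inputs, 12), 2))                 # 30000.0
print(round(tco(inputs, 12, monthly_growth=0.02), 2))
```

The value of a model like this is less the absolute number than seeing which line (compute, egress, storage, or data fees) dominates, and how quickly growth compounds over the planning horizon.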

Final thoughts

Choosing the right cloud deployment requires careful consideration of numerous factors, from latency and connectivity requirements to data use and AI. By addressing these ten essential questions, stakeholders can set themselves up for cloud success. Please reach out to your LSEG Account Team to learn more about LSEG cloud solutions. You can also watch the replay of our Academy session, LSEG in the Cloud.